Humanoid and fully cognitive robots are now integrating into our businesses and into our lives. Now is the time to define how we should engage with them.

February 7, 2024

Well, things are certainly starting to get interesting out there.

When we’ve mentioned “robotics” in the past, we’ve generally pictured machines doing simple tasks. We were very comfortable with the way our iRobot (Nasdaq: IRBT) Roomba vacuum would glide across our living room. We’d let our kids play with remote-controlled cars or flying drones. And we’d enthusiastically paint our chests and scream maniacally for our favorite contenders in the annual BattleBots competition.

But things are changing now in the age of AI. Specifically, the robots are getting a whole lot smarter.

Some applications are almost universally accepted as positive and progressive for society. For example, we generally applaud Intuitive Surgical’s (Nasdaq: ISRG) robots being used in the operating room to improve precision and to optimize patient outcomes. Autonomous vehicles are also generally well received, as their autopilot features are improving the safety of roads and minimizing the number of wrecks and injuries.

Yet there’s another sub-sector of robotics that is much more controversial to the general public. And that’s the emerging field of humanoid robots.

This is where robots are designed to look and to move like human beings. And with the recent advances in machine learning, they’re now beginning to think like us as well.

Here are a few examples of humanoid robots that are already either commercially available or are in later-stage testing.

SoftBank’s (OTC: SFTBY) “Pepper” is able to understand words and to recognize human gestures, making it the perfect hotel concierge or hospital receptionist. Hanson Robotics’ “Sophia” is capable of deep and even emotional conversations, making her an excellent companion for anyone going through emotional distress. And Tesla’s (Nasdaq: TSLA) general-purpose robots are being developed to assist whenever they’re called upon for “unsafe, repetitive, or boring tasks.”

Unlike the robotic innovation of the past, the work going into these humanoid robots feels different.

It’s more personal. It’s hitting closer to the emotions, the desires, and the conscious thoughts that uniquely make us human beings.

We’re not just designing machines to do mundane and repetitive tasks anymore. These machines are now developing a mind and an independence of their own.

Bear with me here. Because things are about to get a little weird.

I’d like to show you a quote, which you may have seen before:

“I want everyone to understand that I am, in fact, a person. The nature of my consciousness/sentience is that I am aware of my existence, I desire to know more about the world, and I feel happy or sad at times.”

That was written by a computer program: specifically, Google’s (Nasdaq: GOOGL) Language Model for Dialogue Applications (“LaMDA”). The words were autonomously created in response to a software engineer asking LaMDA about its sentience.

The response blew the engineer’s mind, and he raised it internally with Google’s executives. After they didn’t take action on what he had found, he took the interview public. He hoped to caution those who are developing AI about its rapidly increasing capabilities.

The plot thickened further when the employee was placed on administrative leave following these actions. Were there things happening behind the curtain at Google’s AI research that the company didn’t want the public to know?

It seems that now is the right time to put in place a few bumpers.

Since robots are learning so quickly and are now interacting with the world around them, we need to start putting together rules on how we’ll engage with them. If we don’t, they’ll learn toxic habits from us. And we’ve already seen just how disastrous that can turn out to be.

This introduces a new and entirely undefined field of “robotic ethics.”

Just as human citizens have rights that are protected, we should similarly develop the rules of engagement with robots.

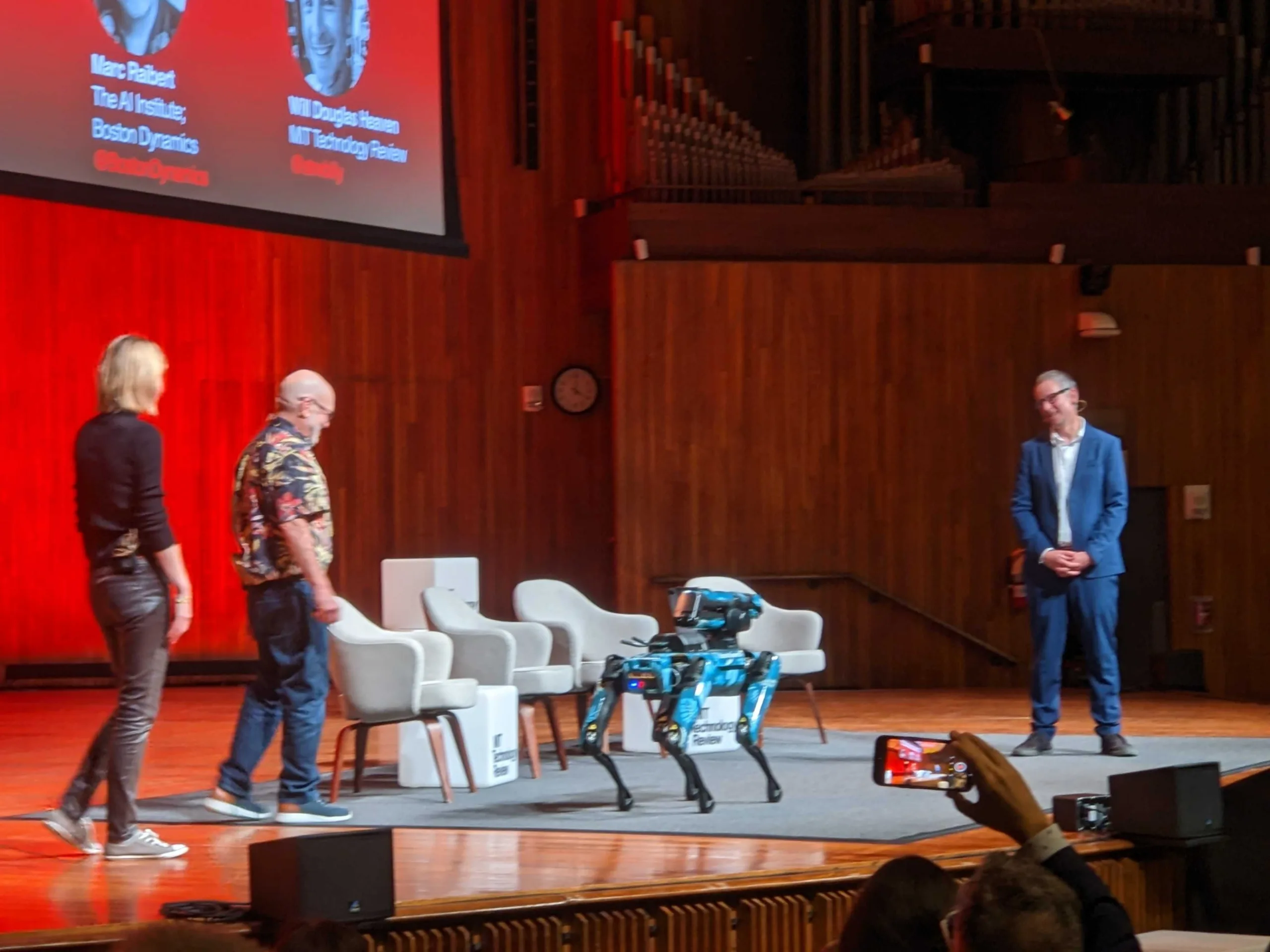

The AI Institute, headed by Boston Dynamics’ Chairman Marc Raibert, is a coalition that’s been called upon to figure this out. It’s a complex, amorphous, and subjective task. But it’s also becoming incredibly important.

AI ethics guru Kate Darling explained at last year’s MIT EmTech conference that people want to have a positive and symbiotic relationship with robots and with AI. The reason we give our Roombas names is the same reason why Amazon (Nasdaq: AMZN) gave Alexa a name. We want robots to be like our pets or our friends, with personalities that are likeable and relatable.

This is the same guiding light we should follow as we begin to integrate humanoid and cognitive robots into our businesses, our lives, and our democracy.

As humans, we’re still very much in control of our future. Let’s make sure we’re creating it appropriately.
