
It's a race to develop the world's highest-performance AI accelerators. Will the Computing King continue to wear the AI crown?

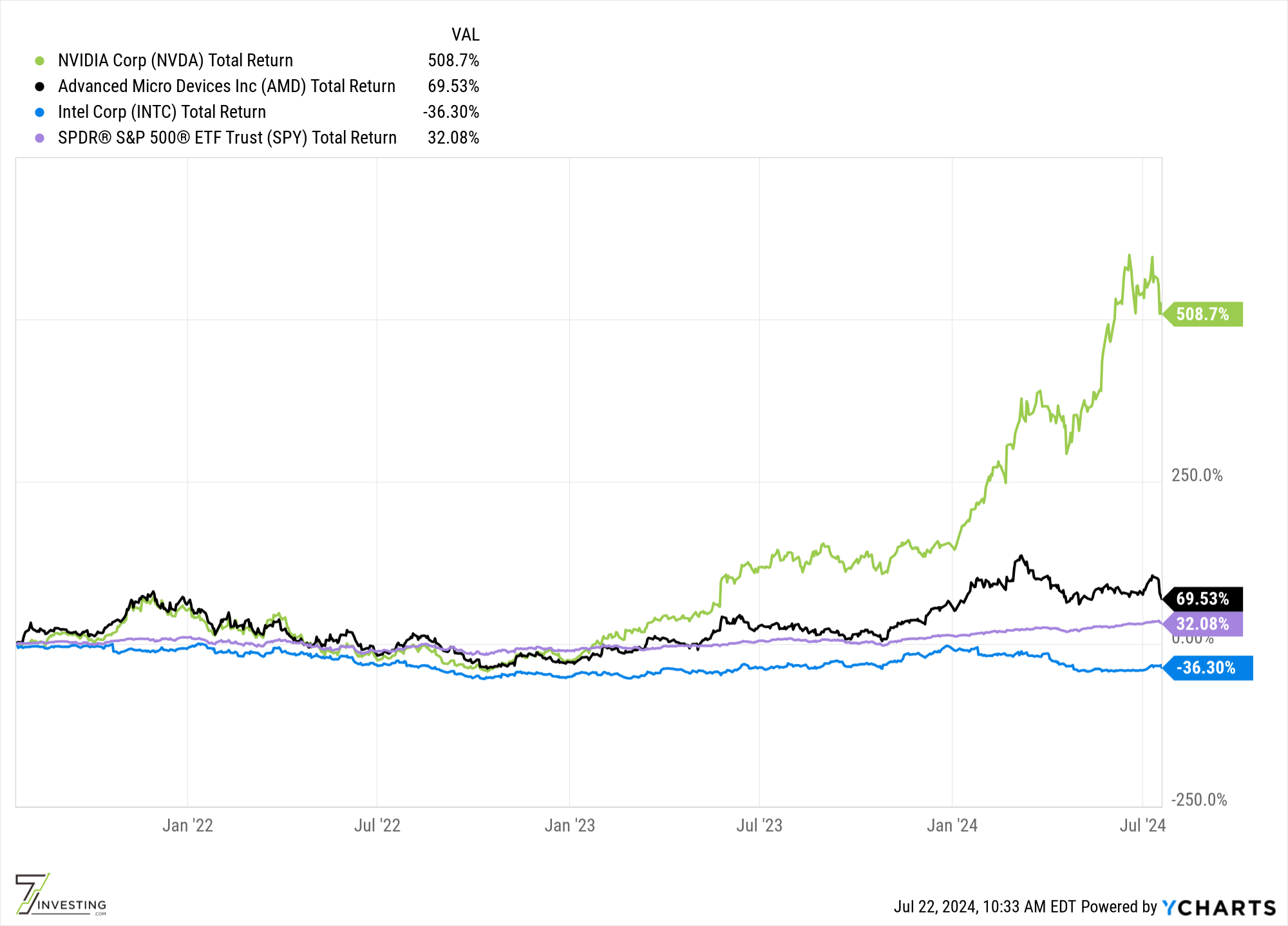

There’s no question in anyone’s mind that NVIDIA (Nasdaq: NVDA) has become the King of AI.

Its GPUs used as AI accelerators in the data center — specifically the high-power/high-performance H100 — have been the driver behind NVIDIA’s stock skyrocketing in recent years.

Yet the $3 trillion question is whether NVIDIA’s incredible sales growth can continue.

I believe the most logical answer is yes. There’s still plenty of AI-powered growth on the horizon.

But there’s also nuance to that answer.

Where, When, and How

“AI” alone isn’t a complete answer to how NVIDIA will grow. Its growth will also depend on how much computing is needed and whose processors are actually providing it.

Today, the mass market is using AI for fun things like asking OpenAI’s ChatGPT questions. The response to each question you ask ChatGPT costs around 3 cents. And that’s based upon the computing done behind the scenes using NVIDIA’s H100 chips in Microsoft’s Azure data centers.

The enterprise world is similarly interested in AI, and large corporations like Meta Platforms (Nasdaq: META) and Tesla (Nasdaq: TSLA) are building their own custom AI chips to train and execute their own AI models using proprietary data. They’re demanding more in-depth and calibrated answers, which serve narrower use cases and applications than those of the broader market. Last year Meta bought 150,000 H100 chips and Tesla bought 50,000, which incurred upfront costs (for the chips; not counting the future training or power costs) of $3 billion and $1 billion, respectively.

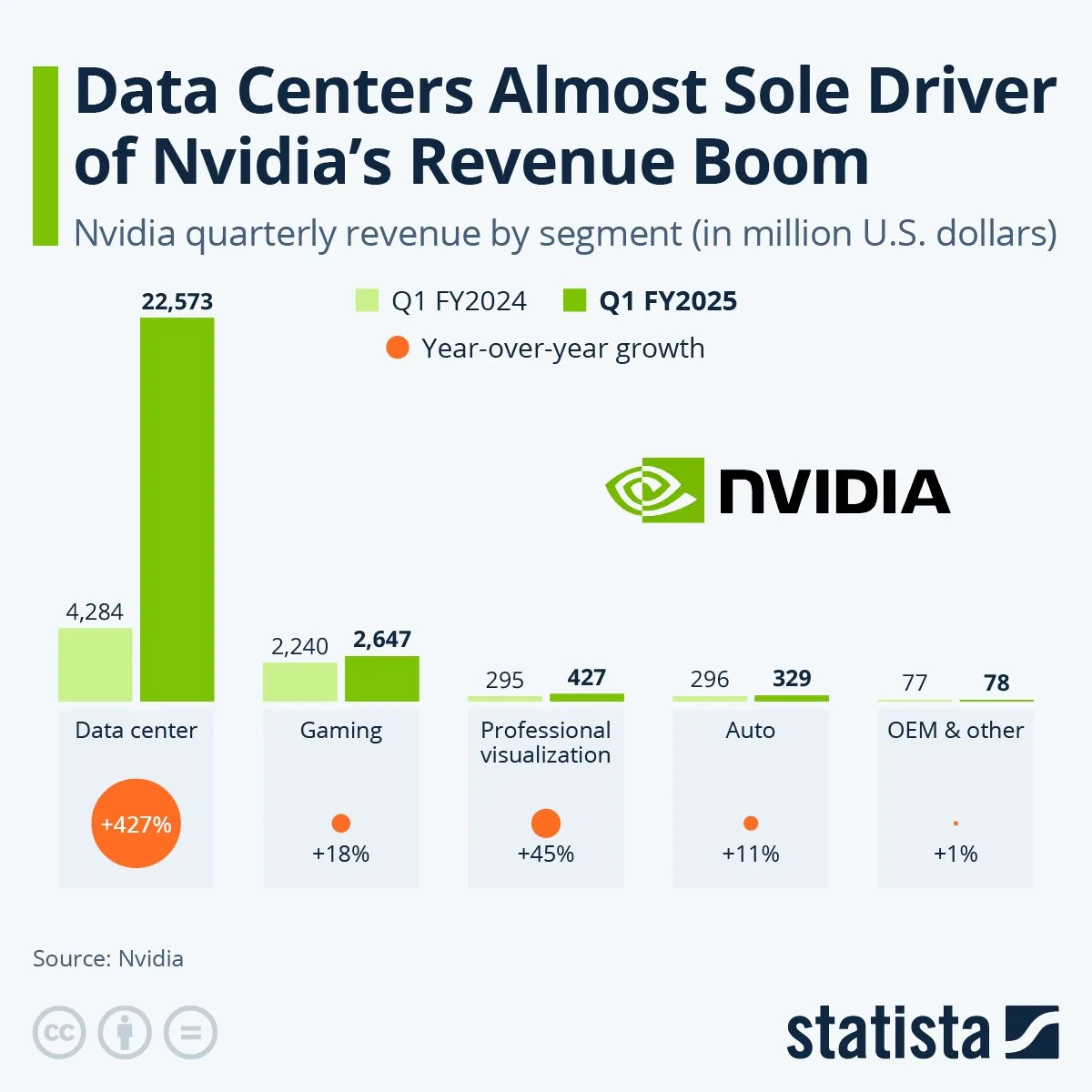

But that’s a drop in the bucket for NVIDIA, at least compared to what it’s selling to the IaaS cloud data centers. NVIDIA sold $47 billion worth of GPUs to data centers last year. It’s Microsoft Azure, Google Cloud, and Amazon Web Services that are the real heavy hitters when it comes to buying high-performance processors.
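To put those numbers side by side, here is a quick back-of-the-envelope sketch in Python. It uses only the rounded figures quoted above and assumes the purchases and the $47 billion total cover the same period, so treat the output as rough. The function names are my own, chosen just for illustration.

```python
# Rounded figures quoted in this article (USD). Nothing here is new data.
PURCHASES = {
    "Meta": {"chips": 150_000, "cost_usd": 3_000_000_000},
    "Tesla": {"chips": 50_000, "cost_usd": 1_000_000_000},
}
DATA_CENTER_SALES_USD = 47_000_000_000


def implied_price_per_chip(chips: int, cost_usd: float) -> float:
    """Average upfront price per chip implied by the totals."""
    return cost_usd / chips


def share_of_data_center_sales(cost_usd: float,
                               total_usd: float = DATA_CENTER_SALES_USD) -> float:
    """Fraction of the quoted data center GPU sales (assumes the same period)."""
    return cost_usd / total_usd


if __name__ == "__main__":
    for name, p in PURCHASES.items():
        price = implied_price_per_chip(p["chips"], p["cost_usd"])
        share = share_of_data_center_sales(p["cost_usd"])
        print(f"{name}: ${price:,.0f} per chip, {share:.1%} of data center sales")

    combined = sum(p["cost_usd"] for p in PURCHASES.values())
    print(f"Combined: {share_of_data_center_sales(combined):.1%}")
```

Both purchases work out to roughly $20,000 per chip, and together they come to under a tenth of the quoted data center total.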

Description of NVIDIA’s segment-specific growth rates. Source: Statista

Looking to the Future

So will these hyperscalers keep spending more every year on NVIDIA’s chips? Or will they invest more heavily in getting their own custom AI processors closer to parity when it comes to performance?

I’m betting it’s the latter.

The gap is massive right now. NVIDIA’s computing performance per watt is by far the best available in the entire industry.

But I expect that gap will narrow within the next few years. Especially when it comes to power consumption, since NVIDIA’s chips are so power-hungry.

Will the rising tide of AI be enough to lift NVIDIA’s stock to new highs? Or will the looming threat of competition make its massive future sales expectations unachievable?

That’s what I’ll be digging deeper into this week for 7investing. In the coming days, I’ll be building out a complete Discounted Cash Flow valuation model for NVIDIA. Similar to what I’ve done for Tesla, Coupang, Rocket Lab, and many others, I’ll be looking to pin down a price per share of what NVIDIA is fundamentally worth. I will share everything I find and the assumptions I have used with everyone on our free 7investing email list.

Right now this is NVIDIA’s market to win. But it also has everything to lose.

Stay tuned.